If you've ever tailed logs for hours, scoured YAML configs like a detective, or stared blankly at an ImagePullBackOff at 2am, congrats. You've played the game of Kubernetes Roulette. And if you're reading this, you probably lost a round or two.

The Roulette of Modern Infrastructure

Kubernetes is incredibly powerful, but troubleshooting it can feel like trying to debug a haunted house. The system is dynamic, distributed, and deeply intertwined with cloud primitives, networking rules, and container quirks. The result? A cascade of cryptic errors that often surface far from their root cause.

- A pod fails to start, but the root cause is a typo in a Secret mounted three layers deep.

- A Service can't reach another Service, but the culprit is actually a forgotten NetworkPolicy.

- CPU is throttling, but the workload isn't to blame; the container runtime is misbehaving.

These aren't just academic puzzles. In production, they burn time, erode confidence, and stall momentum.

And because Kubernetes doesn't forgive easily, junior engineers often hesitate to touch anything at all, leaving the same few team members to shoulder the on-call burden again and again.

Strategies to Level Up (Without Burning Out)

There's no cheat code, but there are ways to build the reflexes and context you'll need before real-world stakes hit:

1. Play in a Sandbox

Spin up a local cluster with tools like kind or minikube. Break things on purpose:

- Deploy something with a missing container image

- Write a bad Deployment spec

- Remove a critical ConfigMap

Then try to recover it. You'll build intuition fast.
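If you want a repeatable starting point for those exercises, here's a minimal sketch in Python 3 that writes three intentionally broken Deployments as JSON files (`kubectl apply -f` accepts JSON as well as YAML). Everything in it is illustrative: the `chaos/` directory, the `broken-*` names, the `app-config` ConfigMap, and the made-up image tag are hypothetical placeholders, and the failure reasons in the comments are what you'd typically expect to see, not guarantees.

```python
import json
from pathlib import Path


def deployment(name, image, selector_labels, template_labels, container_extra=None):
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    container = {"name": "app", "image": image}
    if container_extra:
        container.update(container_extra)
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": selector_labels},
            "template": {
                "metadata": {"labels": template_labels},
                "spec": {"containers": [container]},
            },
        },
    }


def broken_manifests():
    """Return {filename: manifest} for three deliberately broken Deployments."""
    return {
        # Hypothetical tag that should not exist: expect ErrImagePull, then ImagePullBackOff.
        "missing-image.json": deployment(
            "broken-image", "nginx:this-tag-does-not-exist",
            {"app": "broken-image"}, {"app": "broken-image"},
        ),
        # Selector doesn't match the pod template labels, so the API server
        # should reject this at apply time.
        "bad-spec.json": deployment(
            "broken-spec", "nginx:1.25",
            {"app": "broken-spec"}, {"app": "something-else"},
        ),
        # Pulls env vars from a ConfigMap named app-config (hypothetical). If it is
        # missing, new pods should fail with CreateContainerConfigError.
        "missing-configmap.json": deployment(
            "broken-config", "nginx:1.25",
            {"app": "broken-config"}, {"app": "broken-config"},
            {"envFrom": [{"configMapRef": {"name": "app-config"}}]},
        ),
    }


if __name__ == "__main__":
    out_dir = Path("chaos")
    out_dir.mkdir(exist_ok=True)
    for filename, manifest in broken_manifests().items():
        path = out_dir / filename
        path.write_text(json.dumps(manifest, indent=2))
        print(f"wrote {path}")
```

Apply one file at a time (for example, `kubectl apply -f chaos/missing-image.json`) and work out what went wrong before fixing it. The bad spec should be rejected before any pod exists, while the other two are accepted and only fail at runtime. To mimic removing a critical ConfigMap, create `app-config` first (`kubectl create configmap app-config --from-literal=MODE=demo`), confirm the pod runs, then delete it and run `kubectl rollout restart deployment/broken-config`: the existing pod keeps running, but its replacement shouldn't start.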

2. Watch the Right Logs

Don't rely on `kubectl get pods` alone. Practice with:

- `kubectl describe` (great for surfacing events and volume mounts)

- `kubectl logs` (for live app feedback)

- `kubectl get events --sort-by=.metadata.creationTimestamp` (a goldmine during chaos)
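If you'd rather script the triage, here's a rough Python 3 sketch that turns the output of `kubectl get events -o json` into a Warning-only timeline, oldest first, in the spirit of the `--sort-by` command above. It assumes the standard event list shape (an `items` list whose entries carry `type`, `reason`, `message`, `involvedObject`, and `metadata.creationTimestamp`); the function name `warning_timeline` and the output format are our own invention.

```python
import json
import sys
from datetime import datetime


def parse_ts(ts: str) -> datetime:
    # Kubernetes timestamps are RFC 3339 in UTC, e.g. "2024-05-01T02:13:07Z".
    # Swapping "Z" for "+00:00" keeps fromisoformat happy on older Pythons.
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def warning_timeline(event_list: dict) -> list[str]:
    """Return Warning events, oldest first, as one-line summaries."""
    warnings = [e for e in event_list.get("items", []) if e.get("type") == "Warning"]
    warnings.sort(key=lambda e: parse_ts(e["metadata"]["creationTimestamp"]))
    lines = []
    for e in warnings:
        obj = e.get("involvedObject", {})
        lines.append(
            f'{e["metadata"]["creationTimestamp"]} {obj.get("kind")}/{obj.get("name")} '
            f'{e.get("reason")}: {e.get("message")}'
        )
    return lines


if __name__ == "__main__":
    # Usage: kubectl get events -o json > events.json && python3 timeline.py events.json
    if len(sys.argv) > 1:
        with open(sys.argv[1]) as f:
            for line in warning_timeline(json.load(f)):
                print(line)
```

Filtering out `Normal` events is the main point: during an incident, the handful of `Warning` lines (`BackOff`, `Failed`, `FailedMount`, and friends) is usually where the story is.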

3. Pair Up

Kubernetes troubleshooting is a team sport. Run short "incident drills" with peers, using open-source projects or your staging environment as fodder. Prioritize collaboration and communication over speed.

4. Learn the Failure Modes

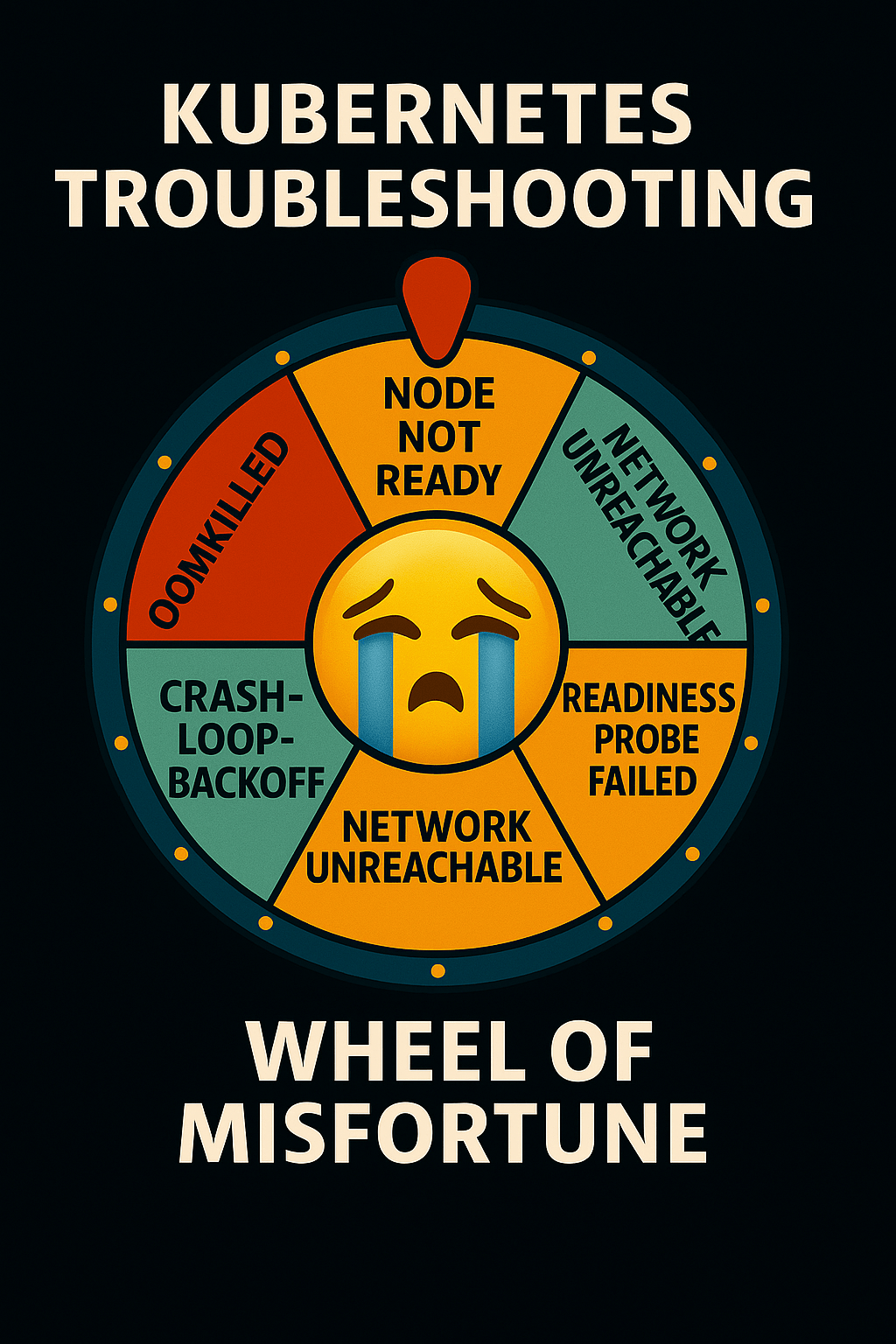

Common issues tend to cluster. Learn to recognize:

- Image pull errors

- Crash loops vs. OOMKills

- Readiness vs. liveness probe failures

- Node-level problems (disk full, networking)

You'll spot patterns faster and panic less.
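To practice telling these apart, here's a heuristic Python 3 sketch that labels a pod from the JSON returned by `kubectl get pod <name> -o json`. It assumes the standard pod status fields (`containerStatuses`, `state`, `lastState`, `ready`, and the top-level `reason` that evicted pods carry); the function name `classify_pod` and the label strings are hypothetical, and the rules are a teaching aid rather than a complete diagnosis.

```python
import json
import sys


def classify_pod(pod: dict) -> list[str]:
    """Map a pod's status to rough failure-mode labels (heuristic, not exhaustive)."""
    status = pod.get("status", {})
    findings = []

    # Node-level: the kubelet evicts pods when a node runs short of
    # resources such as disk or memory.
    if status.get("reason") == "Evicted":
        findings.append("node-level: evicted")

    for cs in status.get("containerStatuses", []):
        name = cs.get("name", "?")
        state = cs.get("state", {})
        waiting = state.get("waiting", {}).get("reason")
        terminated = {
            state.get("terminated", {}).get("reason"),
            cs.get("lastState", {}).get("terminated", {}).get("reason"),
        }
        if waiting in ("ErrImagePull", "ImagePullBackOff"):
            findings.append(f"{name}: image pull error")
        elif "OOMKilled" in terminated:
            # Checked before CrashLoopBackOff: a container that keeps getting
            # OOMKilled also reports CrashLoopBackOff while it waits to restart.
            findings.append(f"{name}: OOMKilled")
        elif waiting == "CrashLoopBackOff":
            findings.append(f"{name}: crash loop")
        elif "running" in state and not cs.get("ready", False):
            # Running but not ready: the readiness probe is failing (or hasn't
            # passed yet). Liveness failures show up as restarts instead.
            findings.append(f"{name}: not ready (readiness probe?)")
    return findings


if __name__ == "__main__":
    # Usage: kubectl get pod <name> -o json > pod.json && python3 classify.py pod.json
    if len(sys.argv) > 1:
        with open(sys.argv[1]) as f:
            for finding in classify_pod(json.load(f)) or ["no known failure mode matched"]:
                print(finding)
```

The ordering is the interesting design choice: checking the last termination reason before the waiting reason is what separates an OOMKill from an ordinary crash loop. Liveness failures don't get their own branch because they surface as restarts; look for `Liveness probe failed` in the `kubectl describe` events to confirm.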

5. Mentorship & Guided Learning

The best way to learn Kubernetes is by doing, but that doesn't mean throwing junior engineers into the deep end without a safety net. Here's how to create a supportive learning environment:

- Schedule on-call rotations during business hours when senior engineers are available to help

- Implement a shadow engineer program where junior engineers pair with experienced team members

- Set up automatic escalation policies that trigger after a reasonable troubleshooting window

- Create clear documentation of past incidents and their solutions

Remember: The best sailors are born in rough seas, but they need proper training and safety equipment first. Balance real-world experience with appropriate safeguards to build confidence and competence in your team.

StarOps: Your Kubernetes Sidekick (Or Hero, When It Counts)

Let's be honest: Kubernetes is not going to get easier on its own. But your team doesn't have to walk the tightrope blindfolded.

StarOps was built for teams who want to move fast without leaving their engineers burned out or buried in debugging sessions. It's your Kubernetes sidekick when things go well, and your hero when they don't.

We handle the plumbing, the observability, and the integrations, so your team can stay focused on shipping and scaling instead of chasing ephemeral errors.